What We Have to Fear from AI

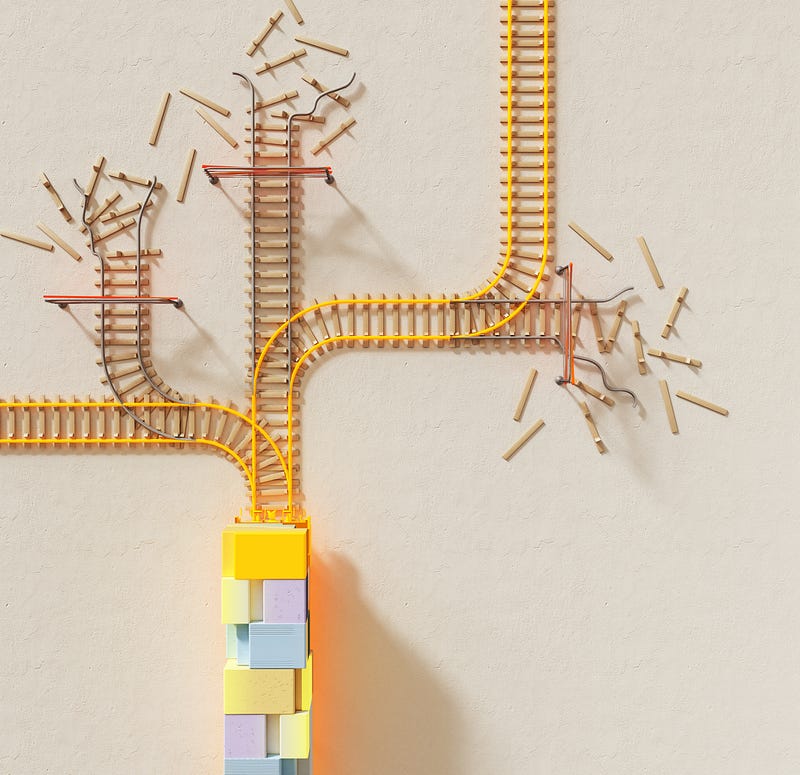

The most dangerous consequence of AI proliferation is not a Terminator-style future; it’s people passing laws.

Artificial Intelligence (AI) has become a hot-button topic over the last few years, thanks to the public release of new language-based models. While not the general or self-aware AI that science fiction has trained us to expect, these large language models (LLMs) hold incredible potential for human betterment, as well as phenomenal potential for our self-destruction.

However, amid all the discussion about AI, one topic frequently goes unexamined: artificial intelligence and the law.

By October of 2023, it had become clear (even to those only passingly familiar with AI) that there was massive trouble ahead for our society. The signs were everywhere, from the call center workers in India who were fired and replaced with an LLM to the sudden, fearful flurry in the halls of State over “deepfake” political ads.

“Unchecked, the deceptive use of AI could make it virtually impossible to determine who is truly speaking in a political communication, whether the message being communicated is authentic or even whether something being depicted actually happened … this could leave voters unable to meaningfully evaluate candidates and candidates unable to convey their desired message to voters, undermining our democracy,” said Trevor Potter, former chair of the Federal Election Commission.

For those not aware: “deepfake” is a term for AI-generated content (especially photographic, video, and audio content) that is so realistic that it is nearly impossible to tell it apart from the real thing.

Are deepfakes a serious problem? Oh gods, yes. I teach the basics of internet security as a library specialist, and, let me tell you, the rising prevalence of high-tech phishing and malicious scams is very real. I’ve written about it at length.

When it comes to the security of our democracy, our legal and political systems, built between the 17th and 19th centuries, cannot keep up. Politicians have every reason to be concerned.

The problem is: where does that concern lead us?

On one hand, we’re liable to dive into ever murkier pools of chaos. AI will make it hard, maybe even impossible, to tell fact from fiction. Even the identity of the person you’re speaking to on the phone will be in doubt, and millions of Americans will fall easy prey to political AI systems that convince them to vote one way or another.

On the other hand, we have reactionary authoritarianism. In order to fight back against AI, every sector of AI research and use becomes “regulated.” This sounds good, until you realize that all it means is that our government bestows massive benefits and authority on a small number of hyper-powerful corporations (the same ones responsible for creating this mess, and all the others we’re currently dealing with).

We will absolutely need new laws, new systems of governance, and new programs of instruction to help ordinary people come to terms with the new world we’re living in. But first, we must ensure that we don’t enshrine corporate dictatorship in the name of self-protection.

Fear-based decisions lead us down a path of nightmares. Love-based decisions could see the dream of AI birth new life into every corner of our weary and inchoate society.

The choice between those two paths is not evenly weighted: our culture runs on fear. But our psychology runs on care for others, on care for the group, and on the foundational natural principle of mutual aid. If we can follow these better angels of our nature, we could avert the worst crises to come and start dealing with those that have already arrived.

Hi there! I’m Odin Halvorson, a librarian, independent scholar, film fanatic, fiction author, and tech enthusiast. If you like my work and want to support me, please consider subscribing!