Reference Librarians and AI Tools: Exploring Groq for Reference Requests

AI is coming in like a wave, and librarians should be right there at the crest.

The rapidly evolving landscape of artificial intelligence (AI) is transforming the way we approach research and information seeking. As AI tools become increasingly sophisticated, they are opening up new possibilities for librarians and researchers to explore complex topics and identify relevant sources.

Because of this, I set out to explore how AI-powered tools could support research on marketing campaigns, which aligns with my day job, focusing specifically on the personalization of email campaigns. My goal was to have the AI take on the role of a professional research librarian and, working within that role, help me narrow the focus of my research project.

I decided to use Groq for this, as it is rapidly becoming my preferred AI tool. While its cloud-based chat interface is not yet fully multimodal, the models it runs outperform GPT-4o in a number of key areas (Figure 1), and it significantly pushes forward the boundaries of AI development.

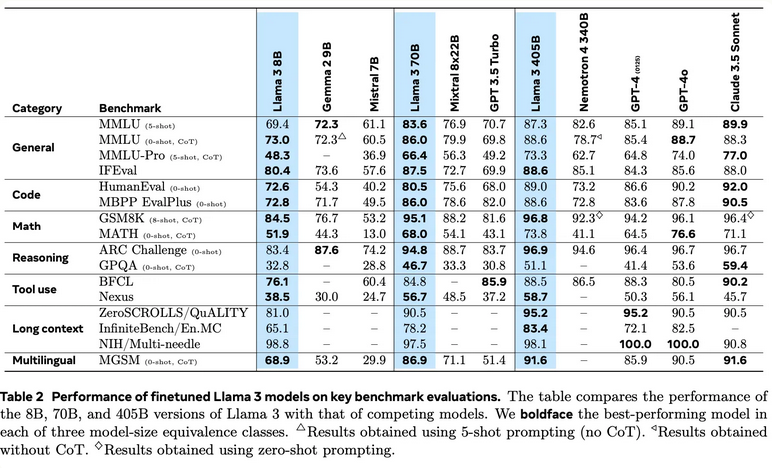

Figure 1: Comparison chart of AI models

While the new Llama 3.1 models are currently rate-limited on Groq, responses still arrive faster than they do from GPT, and I was extremely impressed by the answers the Llama 3.1 70B Versatile model provided. It understood the role I asked it to take on (that of a reference librarian) and even anticipated some of the questions I intended to ask.

I wanted to see if the AI could help me isolate research topics on using AI in marketing campaigns, and then explain how to search for that material in various databases. The initial list of topics it provided was interesting, and I asked Groq to drill down into one of them (the personalization of email campaigns).

The response was impressive, instantly delivering possible subtopics, research questions, and research methodologies to explore. After settling on one of the subtopics, I asked the AI for keywords I could use to search databases for existing research on the topic. Groq not only provided lists of general keywords, but also suggested database-specific keywords, recommended using Boolean search strings, and supplied some example strings.

At that point, I asked Groq to expand on Boolean searching, and it provided 10 increasingly complex strings that would let me search for a broad range of content across many databases.
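Groq's actual strings aren't reproduced here, but the basic pattern behind "increasingly complex" Boolean strings can be sketched in a few lines of Python. This is a minimal illustration that assumes hypothetical keyword groups for the email-personalization topic (they are placeholders, not the keywords Groq returned). Synonyms within a concept are joined with OR, concepts are joined with AND, multi-word phrases are quoted, and `*` is assumed as the truncation symbol. Phrase and truncation syntax varies between databases, so each database's own search help is the final word.

```python
# Hypothetical keyword groups (illustrative placeholders only), one list per concept.
concept_groups = [
    ["email marketing", "email campaigns", "e-mail marketing"],
    ["personali*", "customi*", "segmentation"],  # '*' assumed as the truncation symbol
    ["artificial intelligence", "machine learning", "AI"],
]


def quote_term(term: str) -> str:
    """Wrap multi-word phrases in double quotes so they are searched as exact phrases."""
    return f'"{term}"' if " " in term else term


def build_boolean_query(groups: list[list[str]]) -> str:
    """OR together the synonyms within each concept, then AND the concepts together."""
    clauses = []
    for group in groups:
        terms = " OR ".join(quote_term(t) for t in group)
        # Parentheses keep each OR-group intact when the groups are combined with AND.
        clauses.append(f"({terms})")
    return " AND ".join(clauses)


# Each query adds one more concept, so the strings narrow the search step by step.
queries = [
    build_boolean_query(concept_groups[:n]) for n in range(1, len(concept_groups) + 1)
]

for q in queries:
    print(q)
```

The last line printed combines all three concepts: `("email marketing" OR "email campaigns" OR "e-mail marketing") AND (personali* OR customi* OR segmentation) AND ("artificial intelligence" OR "machine learning" OR AI)`. Starting broad and adding one concept at a time mirrors how a reference interview typically narrows a topic.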

Which left me wondering: which databases should I search? So, naturally, I asked Groq that as well. Within moments, it produced an impressive list of databases grouped by discipline. I was especially pleased to see multidisciplinary open-access databases on the list, which ensured that I would have research options even if I couldn't get access to some of these databases through an institution or library.

Why AI tools matter to librarians

AI tools are here to stay, one way or another. As we move forward, we need to keep two things in mind. First, we are only beginning to tap into the power and complexity of these tools, so librarians need to learn to use them quickly to be prepared for the coming AI era. Second, we should focus on tools that best align with the ethics and responsibilities of library services, and use them to support the patrons we serve.

I chose Groq not simply because it outperforms leading proprietary AI models, but because the company explicitly does not use user input to train models and does not capture user data (beyond what is collected during account creation). This aligns with the American Library Association's privacy guidelines, which state: "Protecting user privacy and confidentiality has long been an integral part of the intellectual freedom mission of libraries" (American Library Association, n.d.).

Groq also runs on a different hardware architecture from the GPUs behind most other top-performing models. Its chips, called Language Processing Units (LPUs), provide the speed that makes Groq so impressive, and they are also far less resource-intensive, which makes them better for the planet and much cheaper to operate and use.

From these points, the ethics of tool choice become clear. But ethics need to be supported by usefulness, and the usefulness of Groq (especially when running the latest Llama models) cannot be overstated. Groq took on its role well and answered my questions with detailed, accurate responses that helped me think about my question in new ways. It also pointed me to resources and methodologies that I was either unaware of or had forgotten.

For reference staff, a tool like this can dramatically speed up the search for requested materials and could improve the overall experience of complex reference interviews. While a patron might not have the skills to engineer prompts that yield high-quality responses, reference staff have the training both to ask the right questions and to assess the value of AI-generated output. In this way, librarians can act as facilitators for these AI tools while also using them to augment their own training and knowledge.

References

American Library Association. (n.d.). Library privacy guidelines for public access computers and networks. Retrieved August 3, 2024, from https://www.ala.org/advocacy/privacy/guidelines/public-access-computer